Why Conversion Rate Optimization in Webflow Is a Continuous System

Conversion rate optimization produces lasting results only when treated as a continuous feedback system, not a series of isolated tests. High-velocity iteration in platforms like Webflow enables teams to test 4–6x more hypotheses annually than traditional stacks, compounding learning and raising hypothesis success rates from 20–30% to 50–60% over time. The difference between stagnant and high-performing optimization programs isn't tooling; it's operational discipline: documented hypotheses, regular review cadences, and cross-functional collaboration that turns each test into input for better decisions. Teams building this infrastructure see 20–40% year-over-year conversion improvements while others plateau after initial gains.

Most marketing teams treat conversion rate optimization as a project with a finish line. They run a handful of A/B tests, pick a winner, and move on. This approach fundamentally misunderstands what conversion rate optimization actually is: not a series of isolated experiments, but a continuous feedback system that compounds learning over time.

The difference matters because one-off testing produces temporary lifts that plateau quickly. Systems produce compounding returns. Teams that build conversion rate optimization into their operating rhythm see 20–40% year-over-year improvement in key metrics, while teams that test sporadically see single-digit gains that fade within quarters.

Webflow's architecture enables this systems approach better than most platforms. Its visual development environment, flexible CMS structure, and lightweight deployment pipeline remove the bottlenecks that turn optimization into a slow, high-friction process. But velocity alone doesn't create a system. You need a repeatable structure for generating hypotheses, validating them, and feeding results back into planning.

This article explains why conversion rate optimization functions as a continuous system, how iteration velocity compounds learning, and what infrastructure teams need to sustain optimization at scale. We'll cover the mechanics of feedback loops, the role of qualitative and quantitative research, and real examples of how design iteration creates measurable business impact.

What Makes CRO a System Instead of a Campaign

A system has inputs, processing mechanisms, outputs, and feedback channels that inform future inputs. Conversion rate optimization becomes a system when you establish:

- Structured hypothesis generation: A repeatable method for identifying which elements to test based on user behavior data, session recordings, and friction analysis

- Fast iteration cycles: The ability to design, build, deploy, and measure changes in days or weeks, not months

- Learning documentation: A shared repository where test results, user insights, and behavioral patterns are stored and referenced for future decisions

- Cross-functional participation: Regular input from design, development, content, and analytics teams to ensure hypotheses reflect multiple perspectives

- Metric accountability: Clear ownership of conversion goals with weekly or bi-weekly review cadences that force prioritization

When these components work together, optimization becomes self-reinforcing. Each test produces data that improves the next hypothesis. Velocity increases as teams develop pattern recognition around what works. The cost per insight decreases as infrastructure matures.

Most teams fail to build this structure because they confuse testing tools with testing systems. Buying an A/B testing platform doesn't create a feedback loop. It creates the capacity to run experiments. The loop requires discipline: pre-defined review cycles, documented learning, and a team that treats optimization as core work, not a side project.

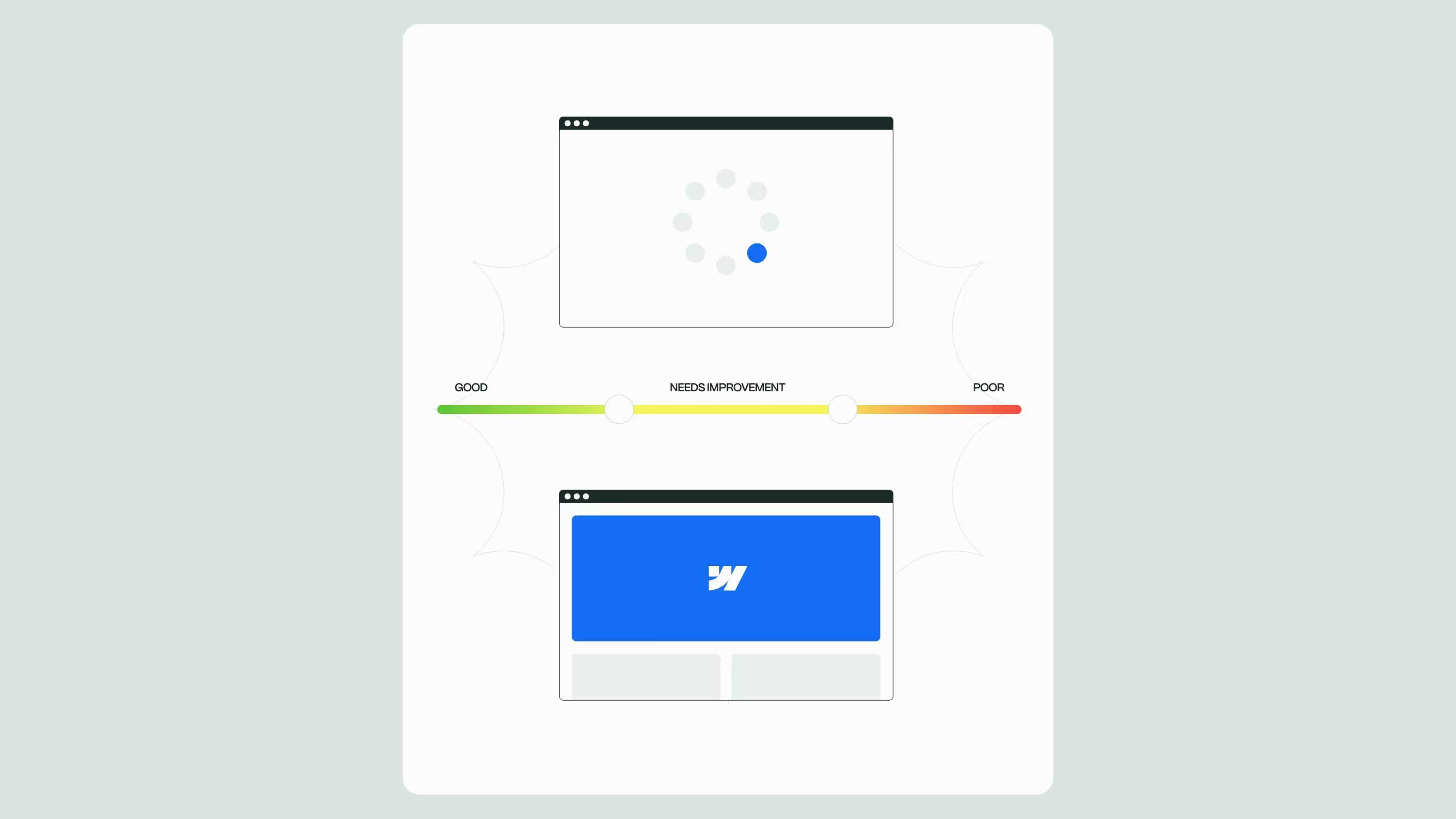

Why Webflow Enables Higher Experimentation Velocity

Platform architecture determines how quickly teams can move from hypothesis to deployed test. Webflow's visual development environment removes several bottlenecks that slow traditional optimization workflows:

- No handoff delays: Designers can build and ship changes without waiting for developer queues, reducing cycle time from weeks to days

- Component-based structure: Reusable elements and symbols let teams test variations across multiple pages simultaneously without rebuilding layouts

- Instant publishing: Changes go live in seconds, not after staging reviews, merge conflicts, or deployment windows

- Integrated CMS: Dynamic content updates happen without code changes, enabling rapid messaging tests across product pages, case studies, or blog templates

Faster iteration doesn't just mean more tests per quarter. It changes the types of questions teams can answer. When deployment takes hours instead of weeks, you can test granular hypotheses about microcopy, button placement, or form field order. These changes would never justify a multi-week project, but collectively they can drive significant conversion lifts.

Consider a SaaS company testing checkout friction. On a traditional stack, each form field variation might require:

- Design mockup (2–3 days)

- Developer implementation (3–5 days)

- QA and staging review (2–3 days)

- Production deployment (1–2 days)

Total cycle time: 8–13 days per variant. In Webflow, the same test goes live in 1–2 days. Over a quarter, this difference means 6–8 tested hypotheses instead of 2–3. The learning rate compounds.

Velocity Creates Pattern Recognition

Higher iteration velocity doesn't just produce more data; it trains teams to recognize behavioral patterns faster. When you test weekly instead of monthly, you see how users respond to different value propositions, layout hierarchies, and friction points across contexts. This pattern recognition becomes predictive.

Teams with high velocity develop intuition about which hypotheses will succeed before running tests. They spot early signals in session recordings or heatmaps that indicate a test is working or failing. They build mental models of user behavior that reduce the cost of being wrong.

How Feedback Loops Turn Data Into Compounding Insight

A feedback loop connects test outcomes back to hypothesis generation, creating a self-improving system. Without this connection, optimization becomes a series of disconnected experiments that don't build on each other.

Effective feedback loops require three components:

- Structured documentation: Every test result includes the hypothesis, success metrics, qualitative observations, and whether the result was implemented, iterated, or discarded

- Regular review cadences: Weekly or bi-weekly sessions where teams discuss recent results, update mental models, and prioritize next hypotheses

- Accessible knowledge base: A shared repository (Notion, Confluence, or similar) where anyone can search past tests by topic, metric, or page
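As a rough illustration of the documentation and knowledge-base components, the sketch below models a test log as plain Python objects. The `ExperimentRecord` fields mirror the items listed above (hypothesis, success metric, observations, and outcome). The class names, the `search` helper, and the sample entries are hypothetical and not tied to Notion, Confluence, or any other tool.

```python
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    IMPLEMENTED = "implemented"
    ITERATED = "iterated"
    DISCARDED = "discarded"


@dataclass
class ExperimentRecord:
    page: str
    hypothesis: str
    success_metric: str
    observations: str
    decision: Decision
    topics: list[str] = field(default_factory=list)


def search(records, page=None, metric=None, topic=None):
    """Return records that match every filter that was supplied."""
    matches = []
    for record in records:
        if page is not None and record.page != page:
            continue
        if metric is not None and record.success_metric != metric:
            continue
        if topic is not None and topic not in record.topics:
            continue
        matches.append(record)
    return matches


# Hypothetical entries, for illustration only.
log = [
    ExperimentRecord(
        page="/pricing",
        hypothesis="Speed-focused headline will lift plan-selection clicks",
        success_metric="plan_cta_clicks",
        observations="Visitors skimmed the feature table in recordings",
        decision=Decision.ITERATED,
        topics=["messaging", "speed"],
    ),
    ExperimentRecord(
        page="/",
        hypothesis="Outcome-led hero copy will lift hero CTA clicks",
        success_metric="hero_cta_clicks",
        observations="Most visitors scrolled past the hero without clicking",
        decision=Decision.IMPLEMENTED,
        topics=["messaging", "hero"],
    ),
]

for record in search(log, topic="messaging"):
    print(record.page, record.decision.value)
```

The same fields map naturally onto database columns in a documentation tool; the point is that every record is filterable by page, metric, and topic.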

What is a CRO feedback loop?

A CRO feedback loop is a structured process where test results inform future hypotheses, creating a cycle of continuous improvement. It includes documentation of what was tested, why it succeeded or failed, and what behavioral patterns emerged. Teams use this accumulated knowledge to design better experiments over time.

For example, a team testing hero section messaging might discover that value propositions emphasizing speed outperform those emphasizing features. This insight informs future tests on pricing pages, email campaigns, and ad copy. Each test becomes input for the next decision.

Without feedback loops, teams repeat the same failed hypotheses across different pages or time periods. With loops, learning compounds. A SaaS company might start with 20% hypothesis success rates and reach 50–60% within 18 months as pattern recognition improves.

Qualitative Research as the Foundation for Quantitative Testing

Most teams start with A/B tests before understanding user behavior. This approach wastes time testing arbitrary variations. Strong optimization systems begin with qualitative research that identifies real friction points, then validate solutions quantitatively.

Qualitative methods include:

- Session recordings: Watching real users navigate pages to identify confusion, hesitation, or unexpected behavior

- User interviews: Direct conversations about decision-making processes, hesitations, and what information users need at each stage

- Form analytics: Field-level data showing where users abandon, which questions cause friction, or where errors occur

- Customer support patterns: Common questions or complaints that indicate gaps in messaging or clarity

When teams invest time in qualitative research first, their quantitative tests become more targeted. Instead of testing random headline variations, they test hypotheses grounded in observed user behavior. This raises success rates and reduces wasted testing on changes that don't address real problems.

From Observation to Hypothesis

The transition from qualitative insight to testable hypothesis follows a repeatable structure:

Observation → "Users scroll past the hero section without engaging with the CTA"

Behavioral inference → "The value proposition isn't immediately clear or compelling"

Testable hypothesis → "Clarifying the outcome users achieve (rather than listing features) will increase hero CTA clicks by 15–25%"

The hypothesis includes a mechanism (why this should work) and a predicted outcome (measurable success criteria). This structure forces teams to articulate assumptions before building variations. When tests fail, teams learn which assumptions were wrong, not just that the variation underperformed.
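One way to enforce this structure is to capture each hypothesis as a small record that cannot be created without an observation, an inference, and a predicted range. The sketch below is a minimal illustration using the hero-section example; the `Hypothesis` class and its method names are assumptions, and `evaluate` only compares an observed lift with the prediction without checking statistical significance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    observation: str       # what was seen in recordings, interviews, etc.
    inference: str         # the assumed behavioral cause (the mechanism)
    change: str            # what the variation does differently
    metric: str            # how success is measured
    predicted_lift: tuple[float, float]  # relative lift range, e.g. (0.15, 0.25)

    def statement(self) -> str:
        low, high = self.predicted_lift
        return f"{self.change} will increase {self.metric} by {low:.0%}-{high:.0%}"

    def evaluate(self, observed_lift: float) -> str:
        """Compare an observed relative lift with the predicted range."""
        low, high = self.predicted_lift
        if observed_lift < low:
            return "below prediction"
        if observed_lift > high:
            return "above prediction"
        return "within prediction"


hero_test = Hypothesis(
    observation="Users scroll past the hero section without engaging with the CTA",
    inference="The value proposition isn't immediately clear or compelling",
    change="Clarifying the outcome users achieve (rather than listing features)",
    metric="hero CTA clicks",
    predicted_lift=(0.15, 0.25),
)

print(hero_test.statement())
print(hero_test.evaluate(0.08))  # hypothetical observed lift of 8%
```

When a result lands below the predicted range, the stored inference is the assumption to revisit first.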

Teams operating conversion rate optimization as a system invest 30–40% of their optimization time in qualitative research. This ratio might seem high, but it dramatically improves the hit rate on quantitative tests. Spending two weeks on user interviews that identify five high-impact hypotheses beats spending two weeks testing random variations.

Design Iteration: How Small Changes Compound Into Large Lifts

Most teams focus on large redesigns or major feature changes. Systems-focused teams obsess over small iterations that reduce friction or clarify value at specific decision points. These micro-optimizations compound into macro results.

Consider a typical SaaS signup flow with four stages:

- Landing page hero → Demo request form

- Form submission → Confirmation page

- Confirmation → Calendar booking

- Calendar booking → Scheduled demo

Each stage has a conversion rate. A 5% relative improvement at each stage compounds:

- Stage 1: 10% → 10.5% (+5%)

- Stage 2: 60% → 63% (+5%)

- Stage 3: 40% → 42% (+5%)

- Stage 4: 70% → 73.5% (+5%)

Overall conversion: 1.68% → 2.04% (+21.6% end-to-end)

This compounding effect explains why continuous systems outperform periodic campaigns. Small, validated improvements at each decision point multiply across the entire funnel rather than simply adding up.
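The funnel arithmetic can be reproduced in a few lines. The sketch below uses the four stage rates from the example and applies the same 5% relative lift to each; because the stages multiply, the end-to-end gain equals 1.05^4 − 1, or roughly 21.6% when computed from unrounded values. The variable names are illustrative.

```python
from math import prod

# Baseline stage conversion rates from the four-stage signup flow.
baseline = [0.10, 0.60, 0.40, 0.70]
relative_lift = 0.05  # 5% relative improvement at every stage

improved = [rate * (1 + relative_lift) for rate in baseline]

overall_before = prod(baseline)   # product of stage rates = end-to-end conversion
overall_after = prod(improved)
end_to_end_lift = overall_after / overall_before - 1

print(f"{overall_before:.2%} -> {overall_after:.2%} ({end_to_end_lift:+.1%})")
```

Changing `relative_lift` or giving each stage its own lift shows how quickly uneven improvements still add up across the funnel.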

How do small CRO changes create large business impact?

Small conversion improvements compound when applied across multiple funnel stages. A 5% lift at four sequential steps doesn't add linearly; it multiplies, creating 20%+ overall gains. Teams that iterate continuously across every touchpoint see larger business impact than teams making occasional big changes.

This approach requires identifying every decision point where users might hesitate or abandon, then systematically testing hypotheses that reduce friction or clarify value at each point. Over quarters, these accumulated small wins produce results that exceed single large redesigns.

Webflow's component structure makes this iteration model practical. Changes to reusable elements propagate across pages instantly, letting teams test improvements broadly without rebuilding layouts.

Infrastructure Requirements for Continuous Optimization

Building a sustainable conversion rate optimization system requires specific infrastructure beyond testing tools:

- Analytics stack: GA4 or similar with event tracking on every meaningful interaction (button clicks, scroll depth, form field engagement)

- Session recording tool: Hotjar, FullStory, or Clarity to observe real user behavior and identify friction points

- Heatmap analysis: Understanding where attention goes and where users drop off before taking action

- Documentation system: Notion, Confluence, or Airtable to track hypotheses, results, and learnings in a searchable format

- Collaboration cadence: Weekly 30-minute reviews where cross-functional teams discuss recent tests and prioritize next experiments

Many teams have these tools but don't use them systematically. The difference between having infrastructure and operating a system is discipline: scheduled reviews, documented outcomes, and accountability for acting on insights.

Broworks builds this infrastructure into client projects from the start, creating optimization-ready sites where tracking, testing, and iteration are native capabilities, not retrofitted afterthoughts.

Common Mistakes Teams Make When Starting CRO Systems

Mistake 1: Testing Without Clear Hypotheses

Running A/B tests to "see what happens" wastes time and produces inconclusive results. Every test should articulate:

- What is being changed

- Why this change should improve conversion

- What user behavior or friction point this addresses

- What metric will measure success

Without these elements, teams can't learn from failures. A test that underperforms isn't a waste if you understand why it failed. That becomes input for better hypotheses.

Mistake 2: Stopping Tests Too Early

Statistical significance requires adequate sample size and time. Stopping a test after 100 conversions or one week of traffic produces unreliable results. Most meaningful tests need:

- Minimum 250–500 conversions per variant

- At least 2 full weeks to account for weekly behavioral patterns

- Consistent traffic sources (avoid large shifts in the paid/organic mix mid-test)

Teams eager for quick wins often declare victory prematurely, implement changes that don't hold up long-term, and lose trust in optimization as a practice.
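A simple guard against premature calls is to check the stopping rules before looking at significance at all. The sketch below is one possible approach, not a prescribed method: `ready_to_evaluate` encodes the minimums from the list above (using the lower bound of 250 conversions), and `two_proportion_z_test` is a standard pooled z-test under the normal approximation. The function names and the sample counts are hypothetical.

```python
from math import erfc, sqrt


def ready_to_evaluate(conv_a, conv_b, days_running, min_conversions=250, min_days=14):
    """True only when both variants meet the conversion and duration minimums."""
    return min(conv_a, conv_b) >= min_conversions and days_running >= min_days


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided pooled z-test for a difference in conversion rates.

    Returns (z, p_value). Positive z means variant B converts better than A.
    """
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("no variation in the data; the test is undefined")
    z = (rate_b - rate_a) / std_err
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail probability of the normal
    return z, p_value


# Hypothetical counts: control vs. variant after 14 days.
control = {"conversions": 300, "visitors": 10_000}
variant = {"conversions": 360, "visitors": 10_000}

if ready_to_evaluate(control["conversions"], variant["conversions"], days_running=14):
    z, p = two_proportion_z_test(
        control["conversions"], control["visitors"],
        variant["conversions"], variant["visitors"],
    )
    print(f"z = {z:.2f}, p = {p:.4f}")
else:
    print("Keep the test running")
```

Checking the p-value repeatedly while a test is still running inflates false positives, which is why the readiness check comes first.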

Mistake 3: Ignoring Segmentation

Aggregate conversion rates hide important behavioral differences. A homepage redesign might improve conversion for new visitors while hurting returning users who knew the old navigation. Without segmentation, you miss this nuance.

Effective optimization systems analyze results by:

- Traffic source (organic, paid, referral, direct)

- Device type (mobile, tablet, desktop)

- User stage (new visitor, returning, customer)

- Geographic location (especially for international audiences)

Segment-level insights often reveal why a test succeeded or failed, providing richer learning than top-line metrics alone.
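To make the point concrete, the sketch below breaks a single test result down by user stage. All numbers are invented to mirror the homepage example: the redesign looks slightly better in aggregate while performing worse for returning users. The `conversion_rates` helper and the row format are assumptions, not the export format of any analytics tool.

```python
from collections import defaultdict


def conversion_rates(rows, segment_key=None):
    """Conversion rate per (segment, variant); segment is "all" when no key is given."""
    totals = defaultdict(lambda: [0, 0])  # [visitors, conversions]
    for row in rows:
        segment = row[segment_key] if segment_key else "all"
        bucket = totals[(segment, row["variant"])]
        bucket[0] += row["visitors"]
        bucket[1] += row["conversions"]
    return {
        key: conversions / visitors
        for key, (visitors, conversions) in totals.items()
        if visitors > 0
    }


# Hypothetical test results, for illustration only.
rows = [
    {"user_stage": "new", "variant": "control", "visitors": 4000, "conversions": 120},
    {"user_stage": "new", "variant": "redesign", "visitors": 4000, "conversions": 168},
    {"user_stage": "returning", "variant": "control", "visitors": 2000, "conversions": 160},
    {"user_stage": "returning", "variant": "redesign", "visitors": 2000, "conversions": 130},
]

overall = conversion_rates(rows)
by_stage = conversion_rates(rows, "user_stage")

for (segment, variant), rate in sorted({**overall, **by_stage}.items()):
    print(f"{segment:<10} {variant:<9} {rate:.2%}")
```

Segments with little traffic produce noisy rates, so segment-level differences are best treated as hypotheses for follow-up tests rather than conclusions.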

Mistake 4: Building Tests That Take Weeks to Deploy

If testing velocity is low, teams can't build feedback loops. The time between hypothesis and validated result becomes so long that organizational priorities shift, stakeholders lose interest, or market conditions change.

According to Webflow's 2026 State of the Website report, teams using the platform typically reduce deployment time by 60–80% compared to traditional development workflows. This velocity advantage only materializes if teams use it: building lightweight tests, shipping frequently, and iterating based on data.

How to Implement a CRO System in Stages

Stage 1: Establish Baseline Measurement (Weeks 1–2)

Before testing anything, teams need accurate baseline metrics:

- Current conversion rates for key pages (homepage, pricing, demo requests, signup)

- Traffic volume and sources for each page

- Existing user behavior patterns from session recordings

- Common friction points identified through support tickets or user feedback

This baseline becomes the benchmark for measuring improvement. Teams skipping this step don't know if changes are working because they don't know where they started.
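A baseline is more useful when it carries a sense of its own uncertainty, since a page with little traffic can swing several points from week to week. The sketch below is one way to record that, assuming a Wilson score interval at roughly 95% confidence; the page names and counts are hypothetical placeholders for your own analytics export.

```python
from math import sqrt


def wilson_interval(conversions, visitors, z=1.96):
    """Wilson score interval for a conversion rate (z=1.96 is about 95% confidence)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    rate = conversions / visitors
    denom = 1 + z**2 / visitors
    center = (rate + z**2 / (2 * visitors)) / denom
    half_width = z * sqrt(rate * (1 - rate) / visitors + z**2 / (4 * visitors**2)) / denom
    return center - half_width, center + half_width


# Hypothetical baseline counts per key page: (conversions, visitors).
baseline_counts = {
    "homepage": (50, 1000),
    "pricing": (90, 1500),
    "demo_request": (24, 300),
}

for page, (conversions, visitors) in baseline_counts.items():
    low, high = wilson_interval(conversions, visitors)
    print(f"{page:<13} {conversions / visitors:.1%}  (plausible range {low:.1%} to {high:.1%})")
```

If a later test result falls inside the baseline's plausible range, the change may be noise rather than improvement.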

Stage 2: Build Hypothesis Backlog (Weeks 3–4)

Create a prioritized list of 15–20 testable hypotheses using qualitative research:

- Watch 30–50 session recordings across key pages

- Conduct 5–10 user interviews about decision-making process

- Analyze form field completion rates and abandonment points

- Review customer support patterns for common questions or confusion

For each hypothesis, document:

- Which page or element it targets

- What behavioral observation it addresses

- What change is being tested

- Predicted impact and success metric

Prioritize based on traffic volume, ease of implementation, and potential impact. High-traffic pages with clear friction points should be tested first.
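The prioritization step can be made explicit with a simple score. The sketch below multiplies normalized traffic by 1–5 ratings for ease and impact; this particular formula, the rating scales, and the backlog entries are illustrative assumptions, and teams often substitute their own weighting.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    name: str
    monthly_traffic: int  # visits to the page the hypothesis targets
    ease: int             # 1 (hard to build) to 5 (trivial)
    impact: int           # 1 (minor) to 5 (major expected effect)


def priority_score(item, max_traffic):
    """Traffic scaled to 0..1, multiplied by the ease and impact ratings."""
    return (item.monthly_traffic / max_traffic) * item.ease * item.impact


def prioritize(items):
    """Return backlog items sorted from highest to lowest priority score."""
    max_traffic = max(item.monthly_traffic for item in items)
    return sorted(items, key=lambda item: priority_score(item, max_traffic), reverse=True)


# Hypothetical backlog entries, for illustration only.
backlog = [
    BacklogItem("Blog footer CTA copy", monthly_traffic=3000, ease=5, impact=2),
    BacklogItem("Demo form field reduction", monthly_traffic=8000, ease=4, impact=5),
    BacklogItem("Pricing page headline", monthly_traffic=20000, ease=5, impact=4),
]

for item in prioritize(backlog):
    print(item.name)
```

A score like this is a conversation starter for the review meeting, not a substitute for judgment about which friction points matter most.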

Stage 3: Run First 3–5 Tests (Weeks 5–10)

Start with simpler hypotheses to build team confidence and establish workflow:

- Headline or value proposition changes on high-traffic pages

- CTA button copy or placement adjustments

- Form field reductions or microcopy additions

Run tests sequentially, not simultaneously, unless pages have completely separate audiences. This ensures clean attribution and avoids interaction effects between tests.

Document results immediately after each test concludes: what worked, what didn't, and what behavioral patterns emerged. Share findings with the broader team to build organizational understanding of optimization as a practice.

Stage 4: Establish Review Cadence (Week 11+)

Once initial tests are complete, implement weekly or bi-weekly optimization reviews:

- Attendees: Design, development, marketing, and analytics stakeholders

- Agenda: Review recent test results, discuss patterns, prioritize next hypotheses

- Duration: 30–45 minutes, time-boxed to maintain focus

- Output: Updated hypothesis backlog with next 2–3 tests approved for build

This cadence turns optimization from a project into an operating rhythm. Teams discuss results while they're fresh, apply learnings to adjacent pages, and maintain momentum even when individual tests fail.

Stage 5: Scale Testing Across Funnels (Quarter 2+)

After establishing basic infrastructure and workflow, expand testing to cover:

- Post-conversion experiences (onboarding, activation, retention)

- Content pages (blog, resources, case studies)

- Paid landing pages and campaign-specific flows

- Email sequences and nurture campaigns

Each new area tested feeds the overall learning system. Patterns discovered in one funnel often apply to others, accelerating optimization across the entire customer journey.

Why Most Organizations Never Build This System

The primary barrier isn't technical; it's organizational. Continuous optimization requires:

- Executive buy-in: Leadership that understands optimization as strategic, not tactical

- Protected time: Team members with dedicated hours for research, testing, and analysis

- Cross-functional collaboration: Regular interaction between design, development, marketing, and analytics

- Tolerance for failure: Acceptance that 50–60% of tests won't produce lifts, and that's productive learning

Many organizations want the results of continuous optimization without the operational commitment. They run occasional tests when time permits, don't document learnings, and wonder why improvement plateaus after initial gains.

Building a system requires treating optimization as core work, not a nice-to-have. It means hiring or developing team members with research and experimentation skills. It means investing in infrastructure that makes testing fast and documentation easy.

Teams that make this investment see compounding returns. Those that don't wonder why their conversion rates haven't improved in years despite running "lots of tests."
