Sitemap vs Robots.txt vs Llms.txt: Which Is More Important?

Confusion around robots.txt, sitemaps, and the newly introduced llms.txt has created a false sense of equivalence between tools that actually serve very different purposes. The real insight is that only robots.txt and sitemaps influence how search engines crawl and index content, while llms.txt provides no measurable impact and is not read by Google at all. Treating llms.txt as a control layer leads to misplaced expectations and overlooked technical fundamentals. The practical shift is refocusing on proven crawl directives and clean discovery architecture to protect content and strengthen visibility, rather than relying on unsupported experimental files.

There’s growing confusion around robots.txt vs llms.txt and how these files interact with your sitemap. Each serves a distinct purpose in the SEO ecosystem, but not all are equally valuable. While robots.txt and sitemaps remain foundational for search visibility and crawling efficiency, llms.txt, despite its recent attention, has proven to be more hype than help.

Understanding what each file does and how they relate to one another can make or break your SEO performance. Let’s break it down.

The purpose of robots.txt and why it still matters

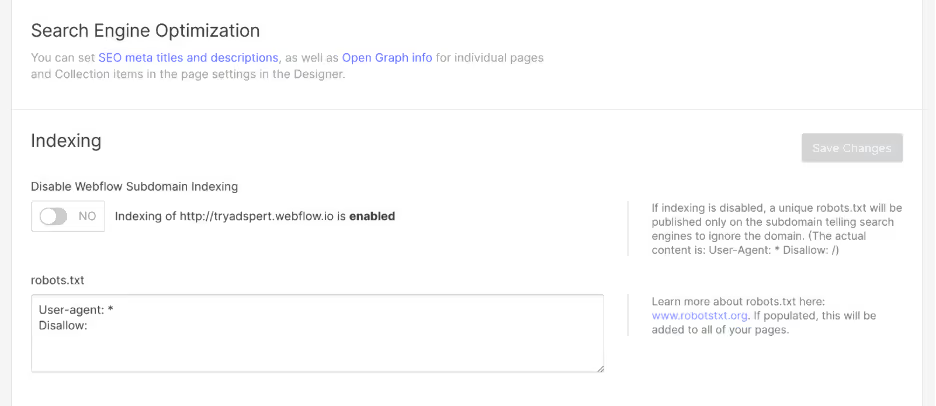

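The robots.txt file is one of the oldest and most essential tools in technical SEO. It tells search engine crawlers which areas of your site they may crawl and which they should skip. A well-configured robots.txt file helps you manage crawl budget, keep crawlers away from duplicate or low-value URLs, and avoid wasted requests on scripts and internal directories. Keep in mind that it governs crawling, not indexing or privacy: a blocked URL can still appear in search results if other pages link to it, so content that must stay out of search needs a noindex directive or authentication instead.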

Best practices for robots.txt include:

- Allow essential content and block unnecessary files like admin pages, internal search results, or temporary assets.

- Include a link to your sitemap to help search engines discover all indexable pages quickly.

- Check your file with the robots.txt report in Google Search Console (the older standalone robots.txt tester has been retired) to prevent accidental blocking.

- Update the file regularly as your site structure evolves.
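To sanity-check rules like these before publishing, one option is to parse a draft file locally. The sketch below uses Python's standard-library `urllib.robotparser`; the domain, paths, and rules are hypothetical. Python applies the first matching rule while Google uses the most specific one, so treat the result as a rough check rather than a guarantee of how any particular crawler behaves.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example site; domain and paths are illustrative.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)

# Report whether a crawler identifying as Googlebot may fetch each path.
for path in ("/blog/post", "/admin/login", "/search?q=shoes"):
    allowed = rules.can_fetch("Googlebot", "https://www.example.com" + path)
    print(f"{path}: {'allowed' if allowed else 'blocked'}")

# Sitemap URLs declared in the file (site_maps() requires Python 3.8+).
print(rules.site_maps())
```

With this draft, the blog post is allowed, the admin and internal search URLs are blocked, and the sitemap URL is picked up from the `Sitemap:` line.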

For Webflow users, optimizing robots.txt is easy because you can manage it directly within the platform. Linking to your sitemap from robots.txt helps both Google and Bing understand your site hierarchy faster.

You can learn more about optimizing your Webflow site structure through our Webflow SEO agency services.

Understanding sitemaps and their advantages

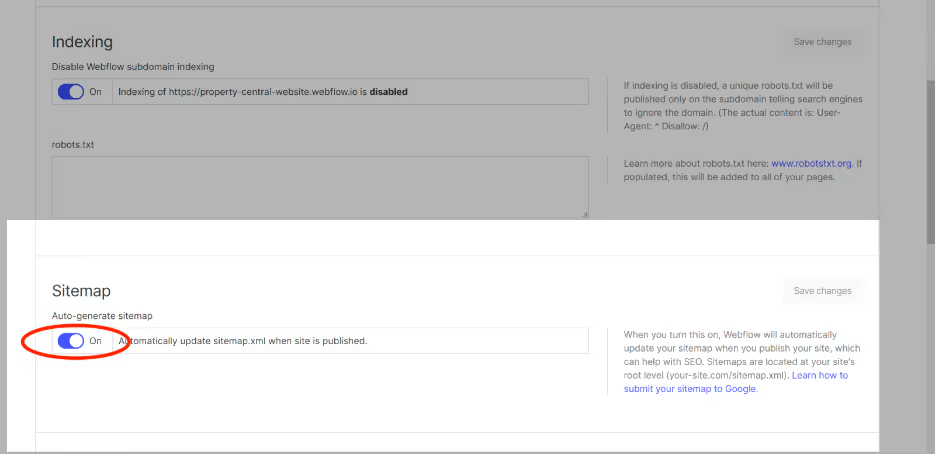

A sitemap is a structured file that lists all the URLs on your website, helping search engines find and crawl your pages efficiently. There are two main types of sitemaps:

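XML sitemaps - Primarily for search engines, providing metadata such as the last-modified date, update frequency, and priority. Google has said it ignores the frequency and priority values and relies on the last-modified date only when it is consistently accurate. XML sitemaps are crucial for large websites with many pages or complex navigation.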

HTML sitemaps - Designed for users, offering a human-readable overview of your site structure. While not mandatory, they can improve user experience and internal linking.

Both types work hand-in-hand with your robots.txt file. When properly implemented, they ensure no valuable content remains undiscovered. In Webflow, generating and maintaining XML sitemaps can be automated, while creating an HTML sitemap can enhance navigation and accessibility.
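Platforms like Webflow generate the XML file for you, but seeing its shape makes it easier to audit. Here is a minimal sketch, assuming a hand-maintained list of pages; the `build_sitemap` helper, URLs, and dates are hypothetical, and only the `loc` and `lastmod` fields from the sitemaps.org protocol are written.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages for illustration only.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/post", "2024-02-01"),
])
print(sitemap_xml)
```

The output can be saved as `sitemap.xml` and referenced from the `Sitemap:` line in robots.txt.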

Where llms.txt fits into the picture

As conversations about AI content usage evolve, llms.txt emerged as a proposed way for publishers to express how their material should be handled by large language models. Conceptually, it was intended to act like a preferences file, mirroring the way sitemaps and robots.txt communicate with search engines. However, its practical impact remains nonexistent. There are no technical standards governing how AI crawlers should interpret it, and major platforms have not adopted it. Google has publicly stated that it does not read or rely on llms.txt at all, leaving the file with no influence over access control, content usage, or attribution.

Robots.txt vs llms.txt: the new debate

The robots.txt vs llms.txt debate emerged when the proposed llms.txt file entered the conversation. Introduced as a potential standard for controlling how large language models (LLMs) access website content, llms.txt was meant to provide a method for webmasters to set permissions for AI crawlers.

However, according to Google’s own documentation and statements, the llms.txt file has little to no practical impact. Google clarified that it does not currently read or follow llms.txt directives, making it more symbolic than functional. Even reports from Search Engine Land confirm that it’s not an official or recognized web standard.

Why llms.txt isn’t useful yet

While the idea behind llms.txt, giving webmasters control over how AI models use their content, is interesting, it lacks enforcement and adoption. No formal guidelines or technical frameworks require AI crawlers to honor it. Additionally, most AI systems already rely on existing web crawlers that respect robots.txt rules.
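Because those crawlers read robots.txt, a per-crawler rule there is the lever that exists today. The sketch below, again using Python's standard-library `urllib.robotparser`, assumes a hypothetical site that blocks OpenAI's `GPTBot` user agent while leaving ordinary search crawling open; the domain and paths are illustrative, and compliance ultimately depends on each crawler choosing to honor the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block one AI crawler entirely, keep search crawlers open.
AI_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

ai_rules = RobotFileParser()
ai_rules.parse(AI_ROBOTS_TXT.splitlines())

page = "https://www.example.com/blog/post"
print("GPTBot:", ai_rules.can_fetch("GPTBot", page))        # False: blocked site-wide
print("Googlebot:", ai_rules.can_fetch("Googlebot", page))  # True: falls under the * group
```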

The absence of attribution is another drawback. Even if llms.txt were to limit data scraping, it would not solve the challenge of AI-generated content lacking proper credit to original creators. This leaves publishers exposed to the ongoing issue of content reuse without recognition or value exchange.

Which file is most important?

In terms of impact on SEO, robots.txt and XML sitemaps remain far more important than llms.txt. They directly influence how search engines discover, crawl, and index your website.

Here’s a simple hierarchy to remember:

- Robots.txt controls crawler access.

- Sitemap.xml helps search engines find content efficiently.

- Llms.txt currently adds no measurable SEO benefit. Google has explicitly stated that the llms.txt concept provides no value for crawling, indexing, or AI training control, and it has zero influence on search rankings or dataset inclusion. As a result, the file offers no practical control, no attribution benefit, and no technical advantage compared to established standards like robots.txt.

So, if you want your website to perform well in organic search, prioritize maintaining a clean robots.txt file and up-to-date sitemap.

Final thoughts

When comparing sitemap vs robots.txt vs llms.txt, the conclusion is clear: focus your efforts on the essentials that move the SEO needle. Llms.txt may become useful in the future if web standards evolve, but for now, it holds no practical role in boosting your visibility or protecting your content.

A properly configured robots.txt file and accurate sitemaps will always deliver measurable value. They keep your site crawl-efficient, ensure discoverability, and provide structure that search engines rely on.

In short, when it comes to robots.txt vs llms.txt, the smart choice is to invest your energy where it counts: on tools that search engines actually use.
