How Top Webflow Design Agencies Prepare Websites for Growth through A/B Testing

Leading Webflow design agencies transform websites into experimentation platforms by embedding A/B testing infrastructure during initial development rather than retrofitting it later. This approach involves modular component architecture that enables surgical element swapping, semantic organization that makes variant creation intuitive, and integrated testing platforms that remove technical bottlenecks between hypothesis and validation. Strategic implementation focuses on high-impact funnel stages: homepage hero optimization first, then form conversion, followed by pricing and feature pages. Organizations that prioritize experimentation-ready builds achieve 20-30% higher conversion rates within 12 months compared to those without systematic testing capabilities. The infrastructure investment adds approximately 15% to initial project timelines but delivers positive ROI within the first quarter through compound conversion improvements that persist as teams iterate continuously.

The gap between high-traffic websites and high-converting websites often comes down to one practice: systematic experimentation. Top Webflow design agencies understand this fundamental truth and embed A/B testing capabilities directly into their builds from day one. Rather than treating experimentation as an afterthought or requiring engineering resources for every test, leading agencies create flexible, CRO-ready systems that empower marketing teams to validate hypotheses, optimize conversion paths, and drive measurable growth without technical dependencies.

When a marketing director at a Series B SaaS company needs to test three different hero messaging approaches, they shouldn't need to file a developer ticket and wait two weeks. When a CMO wants to understand whether a single-column or two-column pricing layout converts better, the answer shouldn't require a complete site rebuild. Top Webflow design agencies solve these bottlenecks by architecting experimentation frameworks that balance flexibility with governance, enabling rapid iteration while maintaining brand consistency and technical integrity.

Understanding the Strategic Value of Experimentation-Ready Webflow Builds

Before diving into implementation specifics, it's essential to understand why top Webflow design agencies prioritize A/B testing infrastructure. Traditional website development often treats testing as a bolt-on feature, something added months after launch when traffic reaches a certain threshold. This approach creates several critical problems.

First, retrofitting testing capabilities into existing websites introduces technical debt. When designers build page layouts without considering variant creation, the resulting structure resists modification. Static layouts become rigid constraints, and seemingly simple tests require wholesale template rebuilds. This is why many organizations choose to migrate from WordPress to Webflow as part of their CRO transformation: starting fresh with experimentation-ready infrastructure proves more efficient than retrofitting legacy systems. Second, delayed testing means missed optimization opportunities. Every day a suboptimal conversion path remains in production represents lost revenue and wasted acquisition spend. Third, technical dependencies create organizational friction. When every test requires developer involvement, marketing teams lose agility, experimentation velocity drops, and data-driven decision making suffers.

Leading Webflow design agencies flip this paradigm entirely. They architect websites as experimentation platforms from the initial wireframe stage. Rather than asking "how should this page look?" they ask "what hypotheses will this team want to test, and how do we enable that testing without creating bottlenecks?" This shift in perspective transforms websites from static publishing tools into dynamic growth engines.

The strategic advantages compound over time. Organizations with experimentation-ready infrastructure test more frequently, learn faster, and optimize more aggressively. According to research from Conversion Rate Experts, companies that run systematic testing programs achieve 20-30% higher conversion rates within 12 months compared to those that don't. The gap widens further when measuring long-term outcomes. After three years, organizations with mature testing cultures consistently outperform competitors by 2-3x on key conversion metrics.

Core Principles: How Top Agencies Structure CRO-Ready Webflow Projects

Top Webflow design agencies approach experimentation infrastructure through several foundational principles. These aren't superficial implementation details but strategic decisions that determine whether a website enables or inhibits growth.

Modular Component Architecture

The first principle centers on modularity. Instead of building page templates as monolithic blocks, leading agencies decompose layouts into discrete, reusable components. Each component represents an independent element that can be tested, modified, or replaced without affecting surrounding content.

Consider a typical SaaS homepage. Rather than treating the hero section as a single entity, top agencies break it into atomic components: headline module, subheadline module, CTA button module, hero image module, and social proof module. This granular structure enables surgical testing. Want to test headline variations? Swap the headline component. Need to evaluate different CTA button copy? Replace just the button module. Testing whether testimonial placement in the hero improves trust signals? Insert or remove the social proof component without touching headline or button code.

This component-based approach delivers multiple benefits. First, it reduces test implementation time from days to minutes. Marketing teams can assemble variants by combining components rather than redesigning entire sections. Second, it improves test quality. When changes are isolated, results become easier to interpret. Third, it creates a testing library over time. Winning components become standardized elements that improve future projects.

Semantic Naming Conventions and Organization

The second principle addresses how content gets organized within Webflow's CMS and style system. Top agencies implement rigorous naming conventions that make variant creation intuitive and reduce errors.

Every collection, field, class, and component follows a predictable taxonomy. Classes use BEM (Block Element Modifier) methodology or similar systems that communicate purpose and hierarchy at a glance. Collections include version fields, variant fields, and status flags that enable conditional display logic. Field names describe content purpose rather than visual presentation: "value proposition" instead of "large text," "conversion goal statement" instead of "blue headline."
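Naming rules are easier to keep when they are checked rather than remembered. Below is a minimal sketch of a class-name lint, assuming the common BEM spelling `block__element--modifier` with lowercase, hyphen-separated words. Webflow does not enforce any naming convention, and teams using a different system (for example an underscore-based framework) would swap in their own pattern; the example class names are hypothetical.

```typescript
// Assumed BEM convention: a block, an optional __element, an optional --modifier,
// each built from lowercase words joined by single hyphens.
const BEM_WORD = "[a-z0-9]+(?:-[a-z0-9]+)*";
const BEM_PATTERN = new RegExp(`^${BEM_WORD}(?:__${BEM_WORD})?(?:--${BEM_WORD})?$`);

interface ClassCheck {
  className: string;
  valid: boolean;
}

// Returns one result per class so reviewers see exactly which names to rename.
function checkBemClasses(classNames: string[]): ClassCheck[] {
  return classNames.map((className) => ({ className, valid: BEM_PATTERN.test(className) }));
}

const bemResults = checkBemClasses([
  "hero__headline", // block + element
  "hero__cta--variant-b", // block + element + modifier, e.g. a test variant
  "Big Blue Text", // describes appearance, uses spaces and capitals
  "hero__headline__inner", // element nested in an element, discouraged in BEM
]);
for (const check of bemResults) {
  console.log(`${check.className}: ${check.valid ? "ok" : "rename"}`);
}
```

Running a check like this before publishing catches presentational names ("Big Blue Text") that make variants hard to identify later.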

The semantic precision serves multiple purposes in testing contexts. First, it makes variant creation self-documenting. A new team member can understand page structure without consulting documentation. Second, it reduces implementation errors. When class names clearly indicate purpose, designers are less likely to apply incorrect styles or break responsive behavior. Third, it enables advanced testing patterns. Semantic structure makes it straightforward to implement personalization, progressive disclosure, and other sophisticated optimization techniques. This semantic approach also supports LLM and AI search visibility, ensuring content remains discoverable as search evolves beyond traditional SEO.

Style System Flexibility Without Chaos

The third principle balances visual consistency with experimental freedom. Top Webflow design agencies build style systems that support rapid variant creation while preventing brand drift and design inconsistencies.

This balance manifests through several specific practices. Agencies establish a core design system with locked base styles, typography scales, color palettes, spacing systems, and grid structures that define brand identity. These elements remain consistent across all tests. Within this foundation, agencies create "variation zones" where experimental styles are permitted. Variation classes inherit core styles but add or override specific properties relevant to testing.

For example, a button system might include a base button class that defines size, spacing, and animation behavior. Variant classes then modify only color, border, or icon properties. This approach ensures buttons remain functionally consistent (same hit area, same interaction pattern) while allowing visual experimentation. When a variant wins, promoting it to the core system requires updating a single class rather than finding and replacing multiple instances across the site.

The style system also incorporates utility classes specifically for testing scenarios. Spacing utilities enable quick layout adjustments. Display utilities support showing and hiding elements based on test conditions. These utilities operate within the established design system constraints, maintaining visual coherence while accelerating test implementation.

Technical Implementation: Building Experimentation Frameworks in Webflow

Moving from principles to practice, top Webflow design agencies employ specific technical strategies to embed testing capabilities into websites. These implementations vary based on project scope, team technical sophistication, and testing volume, but several patterns emerge consistently.

Integration Architecture: Connecting Testing Tools with Webflow

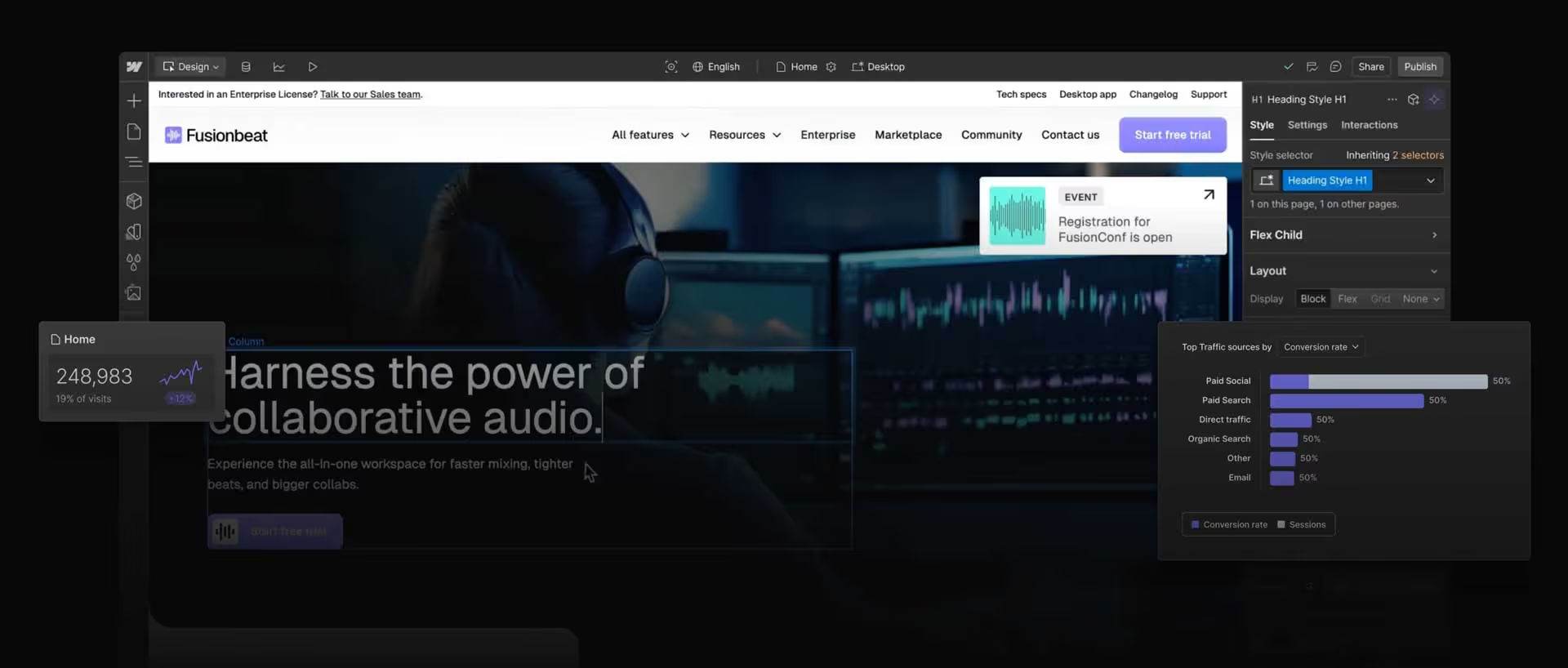

Most experimentation frameworks begin with selecting and integrating a testing platform. Webflow's standard site plans don't include A/B testing (Webflow sells testing and personalization separately as Webflow Optimize), but Webflow integrates smoothly with enterprise testing solutions and specialized CRO tools.

Top agencies typically recommend VWO (for teams wanting visual editors and heatmap integration), Optimizely (for enterprise organizations with complex personalization needs), or Webflow-specific solutions like Optibase that understand Webflow's structure natively. Google Optimize, long the default free choice for teams already using Google Analytics, was discontinued by Google in September 2023 and is no longer an option.

The integration process follows a standard pattern regardless of platform. First, agencies add the testing tool's JavaScript snippet to the site's custom code section in Webflow's project settings. This snippet typically goes in the head tag to ensure it loads before page rendering, preventing flash of original content (FOOC) issues. Second, agencies configure trigger conditions and targeting rules within the testing platform. Third, they establish workflows for variant creation, QA, and launch approval.
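Loading the snippet before render is often paired with an anti-flicker guard: hide the page (or just the tested region) until the testing tool reports that the variant is applied, but reveal it after a short timeout regardless, so a slow or blocked script cannot leave visitors looking at a blank page. The sketch below keeps the DOM work behind two callbacks so the timing logic is easy to follow. The function name, the 2-second default, the `ab-hide` class, and the idea that the tool calls a `ready` hook are assumptions, not any specific vendor's API.

```typescript
type Callback = () => void;

// Hides the tested content immediately and returns a `ready` function.
// The content is revealed exactly once: when `ready` is called (e.g. from the
// testing tool's "variant applied" hook) or when the timeout expires, whichever
// comes first, so a slow or blocked testing script cannot keep the page hidden.
function antiFlickerGuard(hide: Callback, reveal: Callback, timeoutMs = 2000): Callback {
  let revealed = false;
  let timer: ReturnType<typeof setTimeout> | undefined;
  const revealOnce: Callback = () => {
    if (revealed) return;
    revealed = true;
    if (timer !== undefined) clearTimeout(timer);
    reveal();
  };
  hide();
  timer = setTimeout(revealOnce, timeoutMs);
  return revealOnce;
}

// Browser usage (assumed; "ab-hide" would set opacity: 0 in the site's CSS):
// const ready = antiFlickerGuard(
//   () => document.documentElement.classList.add("ab-hide"),
//   () => document.documentElement.classList.remove("ab-hide"),
// );
// ...then call ready() from whatever callback the testing tool exposes.
```

The timeout is the design trade-off: too short and visitors briefly see the original before the variant swaps in; too long and a testing outage visibly slows the site.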

The key architectural decision involves whether to build variants within Webflow or within the testing tool itself. Top agencies favor a hybrid approach. Simple tests (headline copy, button color, image selection) run entirely within the testing platform using its visual editor or JavaScript modifications. This enables rapid implementation without touching Webflow. Complex tests (layout restructures, component additions, full page redesigns) get built as alternate Webflow CMS items or pages. The testing tool then controls which version displays, but the actual code lives in Webflow.

This hybrid strategy balances speed and quality. Trivial tests don't require Webflow access, enabling high test velocity. Significant tests maintain design system integrity and responsive behavior because they're built properly in Webflow. The division of labor also creates clear ownership. Marketing teams can run simple tests independently, while designers handle complex structural changes.

Conditional Display Logic and Variant Systems

Beyond external testing tools, top agencies build conditional logic directly into Webflow projects. This approach works particularly well for personalization, staged rollouts, and internal previews before formal testing.

The implementation centers on Webflow's conditional visibility and CMS filtering capabilities, supplemented by a small custom script. Agencies create variant fields in CMS collections, then use conditional visibility to display specific variants based on Collection field values; because native conditional visibility does not read URL parameters or cookies, the script handles those. For example, a blog post collection might include a "headline_variant" field with options like "default," "data_focused," and "emotion_focused." Collection pages then display different headline versions depending on this field value, and URL parameters, read by the script, control which variant renders.
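The parameter-reading part of this pattern can be quite small. Below is a minimal sketch, assuming a hypothetical `headline_variant` query parameter and a cookie of the same name, with the three variant names from the example above. The script prefers an explicit URL parameter (handy for QA links), falls back to a previously stored cookie, and otherwise uses the default; unknown values are ignored so a mistyped link cannot render an empty slot.

```typescript
// Hypothetical variant names, matching the CMS field options in the example.
const HEADLINE_VARIANTS = ["default", "data_focused", "emotion_focused"] as const;
type HeadlineVariant = (typeof HEADLINE_VARIANTS)[number];

function isHeadlineVariant(value: string | null | undefined): value is HeadlineVariant {
  return value != null && (HEADLINE_VARIANTS as readonly string[]).includes(value);
}

// Parses a `document.cookie`-style string ("a=1; b=2") into a map.
function parseCookies(cookieString: string): Map<string, string> {
  const cookies = new Map<string, string>();
  for (const part of cookieString.split(";")) {
    const eq = part.indexOf("=");
    if (eq === -1) continue;
    const key = part.slice(0, eq).trim();
    const raw = part.slice(eq + 1).trim();
    try {
      cookies.set(key, decodeURIComponent(raw));
    } catch {
      cookies.set(key, raw); // keep malformed encodings as-is rather than failing
    }
  }
  return cookies;
}

// Precedence: explicit URL parameter, then a stored cookie, then the default.
function resolveHeadlineVariant(pageUrl: string, cookieString: string, key = "headline_variant"): HeadlineVariant {
  const fromUrl = new URL(pageUrl).searchParams.get(key);
  if (isHeadlineVariant(fromUrl)) return fromUrl;
  const fromCookie = parseCookies(cookieString).get(key);
  if (isHeadlineVariant(fromCookie)) return fromCookie;
  return "default";
}

// In a browser this would be called as resolveHeadlineVariant(location.href, document.cookie).
console.log(resolveHeadlineVariant("https://example.com/blog/post?headline_variant=data_focused", ""));
```

The resolved name would then be used to show the matching headline element and hide the others.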

This pattern extends to more sophisticated scenarios. Some agencies build complete multi-variant page systems within single Webflow pages. A homepage might include three hero sections, three feature layouts, and two CTA approaches, all present in the page structure but conditionally visible based on combo classes or custom attributes. JavaScript snippets read cookies or URL parameters, then toggle visibility by adding or removing classes. The external testing tool assigns each visitor to a test group and sets the parameter or cookie that the snippet reads, completing the loop.
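Testing platforms handle assignment themselves, but the underlying idea explains why a returning visitor keeps seeing the same variant: assignment is a deterministic function of a stable visitor ID and the experiment ID. The sketch below hashes those IDs (FNV-1a followed by the MurmurHash3 32-bit finalizer) into weighted buckets. The hash choice, function names, arm names, and weights are illustrative assumptions, not how any particular tool works; the returned arm name is what a toggle script would turn into a combo class or attribute.

```typescript
// FNV-1a over UTF-16 code units, followed by the MurmurHash3 32-bit finalizer
// so that IDs differing only in their last characters still spread evenly.
function hash32(input: string): number {
  let h = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    h ^= input.charCodeAt(i);
    h = Math.imul(h, 0x01000193);
  }
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0; // unsigned 32-bit
}

interface Arm {
  name: string;
  weight: number;
}

// Deterministically maps a visitor to one arm, in proportion to the weights.
// Salting with the experiment ID keeps assignments independent across experiments.
function assignArm(visitorId: string, experimentId: string, arms: Arm[]): string {
  const total = arms.reduce((sum, arm) => sum + arm.weight, 0);
  if (arms.length === 0 || total <= 0) throw new Error("need at least one arm with positive weight");
  const point = (hash32(`${experimentId}:${visitorId}`) / 2 ** 32) * total; // in [0, total)
  let cumulative = 0;
  for (const arm of arms) {
    cumulative += arm.weight;
    if (point < cumulative) return arm.name;
  }
  return arms[arms.length - 1].name; // only reached through floating-point rounding
}

const heroArms: Arm[] = [
  { name: "hero-roi", weight: 1 },
  { name: "hero-speed", weight: 1 },
  { name: "hero-security", weight: 1 },
];
// The same visitor always lands in the same arm.
console.log(assignArm("visitor-123", "homepage-hero", heroArms));
```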

The advantage of this approach is complete control and immediate results. Designers can preview all variants without waiting for test implementation. QA becomes straightforward: visit the page with different parameters to verify each variant renders correctly. Test setup reduces to configuring the testing tool's traffic allocation and goal tracking, since variants already exist in production.

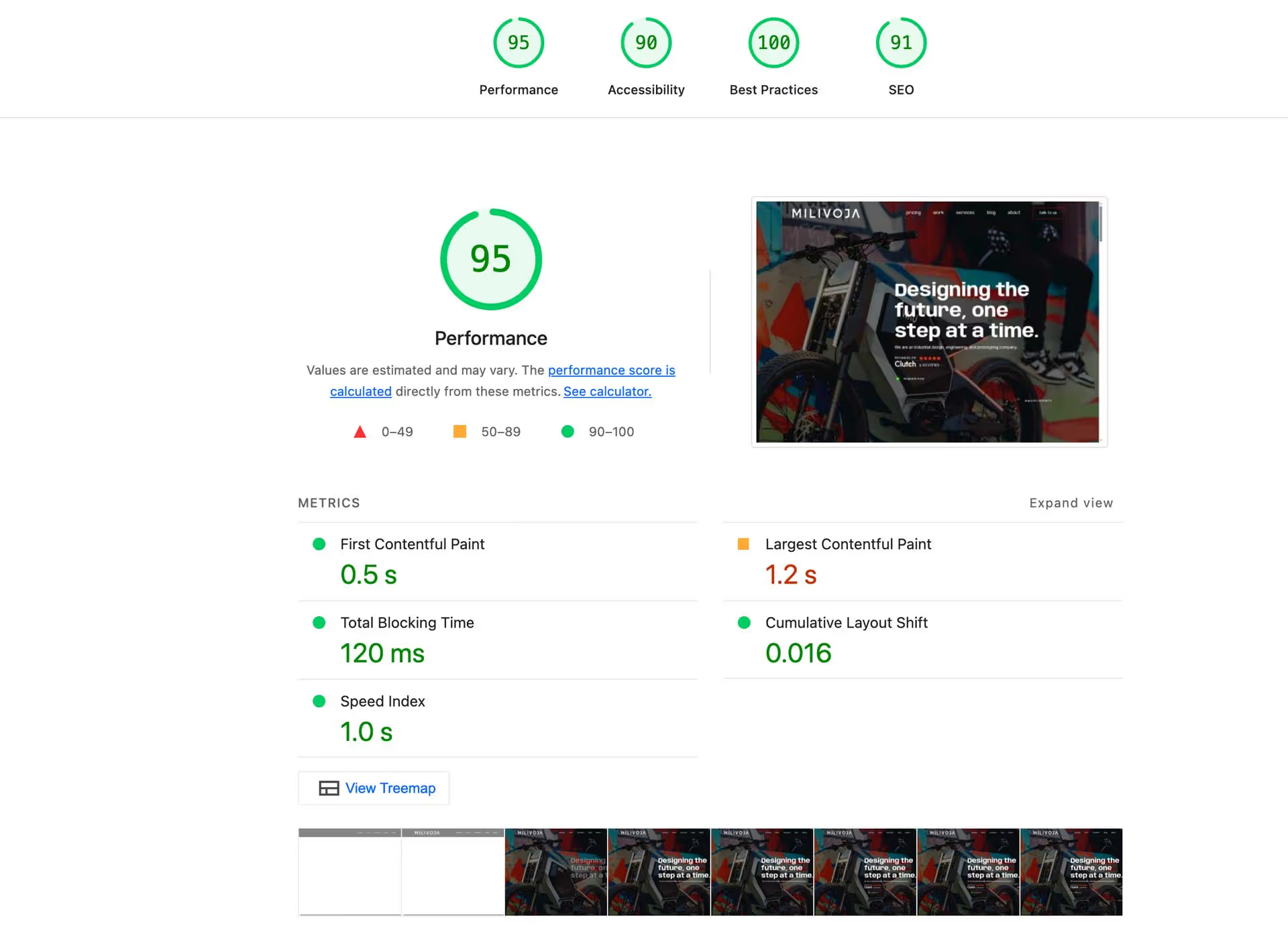

Performance Optimization for Tested Pages

A critical but often overlooked aspect of experimentation frameworks involves performance optimization. Poorly implemented testing can degrade page speed, which itself influences conversion rates and creates confounding variables in test results.

Top Webflow design agencies address this through several specific practices. First, they minimize JavaScript dependencies. Rather than loading multiple testing tools simultaneously, agencies consolidate through tag managers or select single platforms. Second, they optimize variant code. Even if five variants exist on a page, only one renders for each visitor. Agencies ensure unused variants don't download images or execute scripts, typically through lazy loading and conditional script execution. Third, they protect the critical rendering path. Where the testing tool supports it, its snippet loads asynchronously behind a short anti-flicker timeout, so a slow or unavailable testing server cannot hold up the initial page render.

Some agencies implement more advanced patterns like edge-side rendering for tests. By detecting test assignment at the CDN layer, they can serve fully-rendered variants without client-side JavaScript execution. This approach requires more sophisticated infrastructure but delivers the fastest possible performance for tested pages.

Practical Testing Patterns: What Top Agencies Actually Test

Understanding the infrastructure matters, but knowing what to test determines success. Top Webflow design agencies bring domain expertise about which tests drive meaningful results across different industries and growth stages.

Homepage and Landing Page Optimization

For SaaS and B2B companies, the homepage serves multiple audiences simultaneously: new visitors evaluating the product, existing users seeking support, and investors assessing company credibility. Top agencies structure homepage tests around these distinct personas.

Common high-impact tests include hero headline variations that emphasize different value propositions (speed vs. security, ROI vs. ease of use), social proof placement and format (customer logos vs. testimonials vs. usage statistics), CTA copy and positioning (try free vs. get demo vs. see pricing), and feature presentation structure (feature grid vs. benefit-focused narrative vs. use case examples).

Leading agencies avoid testing too many elements simultaneously. Instead, they sequence tests strategically. Start with the hero section, which receives the most attention and influences bounce rates. Once a winning hero approach emerges, move to feature presentation. After optimizing the primary conversion path, test secondary elements like footer CTAs and exit intent modals.

Conversion Path and Form Optimization

For lead generation and SaaS free trial conversions, form optimization generates some of the highest ROI tests. Top agencies approach form testing systematically rather than randomly changing field counts or button colors.

The optimization sequence typically begins with form length testing. Does a two-field email capture outperform a five-field qualification form? The answer depends on sales team efficiency and lead quality requirements. Next, agencies test field order and labeling. Progressive disclosure patterns (showing fields sequentially) often outperform all-at-once presentation. Field labels that communicate value ("Get my custom plan" instead of "Email address") can lift conversion rates 10-20%.

Form placement testing comes next. Some audiences prefer inline forms on the homepage. Others respond better to dedicated landing pages. Still others convert most readily through multi-step flows that gather information progressively. Top agencies test all three patterns, measuring not just form completion rates but downstream metrics like activation, retention, and revenue per lead.

Advanced form tests incorporate dynamic logic based on user inputs. If someone selects "enterprise" as company size, the form might request additional fields relevant to enterprise sales. If they select "startup," it might skip directly to trial activation. This conditional logic reduces friction for simple use cases while gathering necessary information for complex sales.
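The branching described above can be kept as a small rule table rather than scattered across form settings. Below is a minimal sketch with hypothetical field names, button labels, and company-size values; in a real build the table would decide which form step is shown next, and the enterprise fields would come from the sales team.

```typescript
type CompanySize = "startup" | "smb" | "enterprise";

interface FormStep {
  fields: string[];
  submitLabel: string;
}

// Hypothetical first step shown to every visitor.
const BASE_FIELDS = ["work_email", "company_size"];

// Hypothetical follow-up step per answer; null means "skip straight to trial activation".
const FOLLOW_UP: Record<CompanySize, FormStep | null> = {
  enterprise: { fields: ["company_name", "seat_count", "timeline", "phone"], submitLabel: "Talk to sales" },
  smb: { fields: ["company_name"], submitLabel: "Start free trial" },
  startup: null,
};

// Total number of fields a visitor fills in for a given company size.
function totalFieldCount(size: CompanySize): number {
  return BASE_FIELDS.length + (FOLLOW_UP[size]?.fields.length ?? 0);
}

for (const size of ["startup", "smb", "enterprise"] as const) {
  const step = FOLLOW_UP[size];
  console.log(`${size}: ${totalFieldCount(size)} fields, then ${step ? `"${step.submitLabel}"` : "trial activation"}`);
}
```

Keeping the rules in one table also makes the branching itself testable: a variant can change a single entry (say, dropping `phone` for enterprise) without touching the form markup.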

Pricing Page and Packaging Tests

Pricing page optimization represents one of the highest-stakes testing opportunities. Small changes in plan positioning, feature attribution, or pricing display can influence revenue by millions of dollars annually.

Top agencies approach pricing tests with appropriate caution. Rather than testing radical price changes in production, they typically test pricing communication and packaging. This includes plan order (cheapest first vs. most popular first), feature organization (grouped by category vs. listed by plan), visual emphasis techniques (highlighting recommended plans vs. neutral presentation), and pricing display format (monthly vs. annual vs. toggle between both).

Some of the most impactful pricing tests involve plan names and value propositions. Generic names like "Professional" and "Enterprise" communicate less than outcome-focused alternatives like "Growth" and "Scale." Feature lists that emphasize benefits ("Unlimited API calls to power your application") outperform technical specifications ("API rate limit: unlimited").

Agencies also test supplementary pricing page elements: FAQ sections that address objections, comparison tables that highlight differences between plans, calculator tools that help buyers estimate ROI, and case studies that demonstrate plan value. These supporting elements often influence conversion as much as the pricing structure itself.

Advanced Patterns: Multi-Channel and Sequential Testing

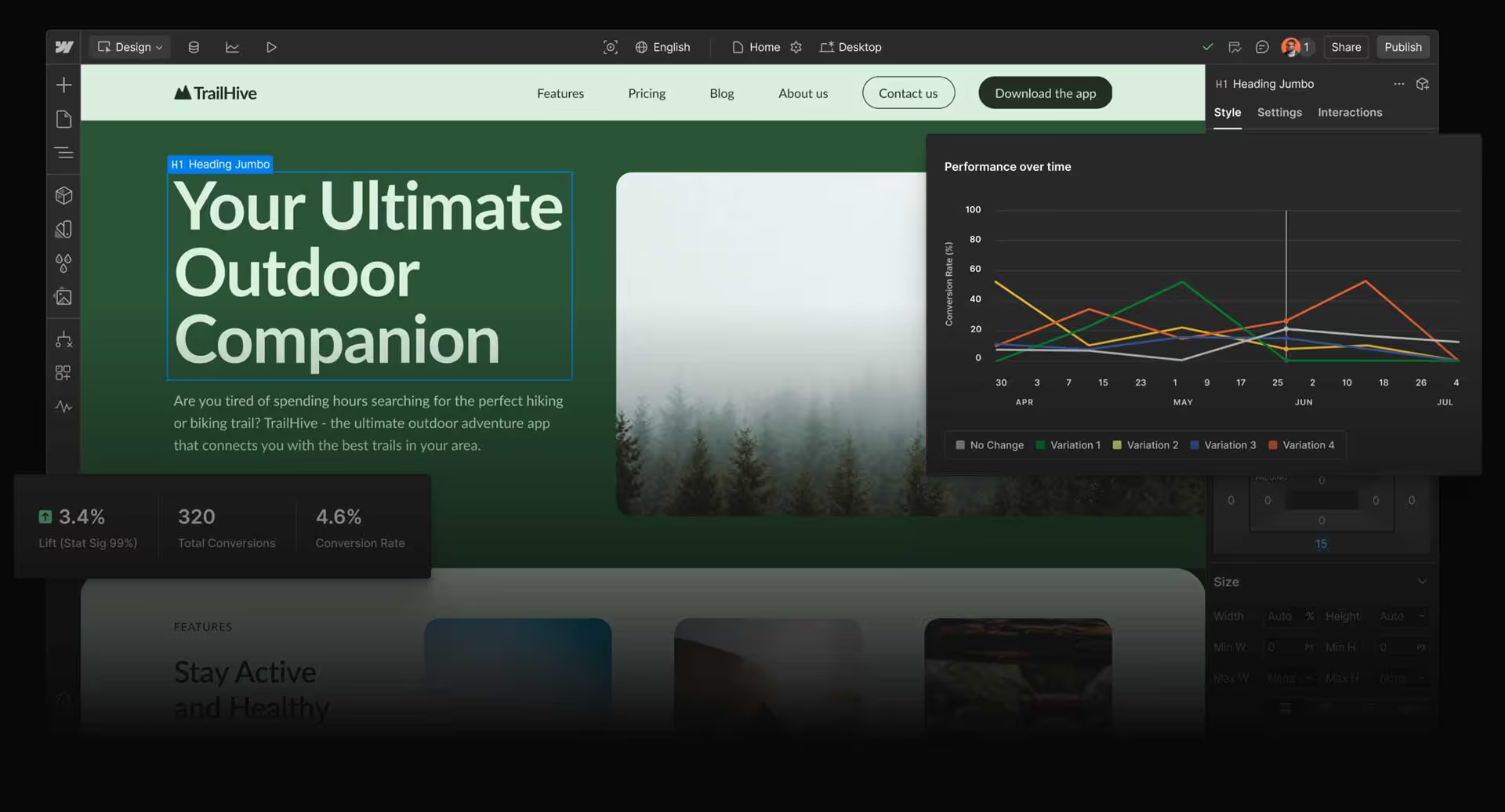

Beyond individual page tests, top Webflow design agencies implement sophisticated multi-channel testing strategies that optimize entire conversion funnels.

Cross-Page Funnel Optimization

Rather than optimizing pages in isolation, leading agencies map complete user journeys and test funnel-level hypotheses. A typical SaaS funnel might include paid ad → landing page → feature page → pricing page → signup form → onboarding. Each step represents a testing opportunity, but the interdependencies matter.

For example, an aggressive landing page headline that promises "10x ROI in 30 days" might increase landing page conversions but decrease signup completion if the product can't deliver that promise. Funnel testing identifies these disconnects. Agencies implement consistent tracking across all funnel steps, measure drop-off points, and test coordinated changes that improve end-to-end conversion even if individual page metrics remain flat.

Some agencies build sophisticated attribution models that weight test results by downstream value. A pricing page variant that generates 20% more trial signups but 30% fewer paying customers ultimately hurts revenue. By tracking cohorts from test exposure through revenue events, agencies identify variants that improve true business outcomes rather than vanity metrics.
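Revenue per visitor folds every funnel step into one number, which makes the trade-off in that example visible at a glance. Below is a sketch with illustrative cohort counts chosen to reproduce the "20% more trials, 30% fewer paying customers" case; the field names and the flat $1,000 revenue per customer are assumptions.

```typescript
interface Cohort {
  label: string;
  visitors: number;
  trials: number;
  payingCustomers: number;
  revenuePerCustomer: number; // assumed flat value per paying customer
}

// Converts raw cohort counts into per-visitor rates so variants can be compared on revenue.
function summarizeCohort(c: Cohort) {
  return {
    label: c.label,
    trialRate: c.trials / c.visitors,
    paidRate: c.payingCustomers / c.visitors,
    revenuePerVisitor: (c.payingCustomers * c.revenuePerCustomer) / c.visitors,
  };
}

// Illustrative numbers: the variant gets 20% more trials but 30% fewer paying customers.
const control = summarizeCohort({ label: "control", visitors: 10000, trials: 500, payingCustomers: 100, revenuePerCustomer: 1000 });
const variant = summarizeCohort({ label: "variant", visitors: 10000, trials: 600, payingCustomers: 70, revenuePerCustomer: 1000 });
for (const s of [control, variant]) {
  console.log(`${s.label}: trial rate ${(s.trialRate * 100).toFixed(1)}%, revenue per visitor $${s.revenuePerVisitor.toFixed(2)}`);
}
```

Judged on trial rate alone the variant wins; judged on revenue per visitor it loses, which is exactly the disconnect cohort tracking is meant to expose.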

Personalization and Dynamic Content

The most advanced experimentation frameworks blur the line between A/B testing and personalization. Rather than serving identical experiences to all visitors, top agencies implement dynamic content that adapts based on visitor characteristics, behavior, and context.

Personalization starts simple: displaying different CTAs for first-time vs. returning visitors, showing industry-specific examples based on referral source, or emphasizing different value propositions for visitors from different geographic regions. As sophistication increases, agencies implement predictive personalization that uses machine learning to determine which content variations each visitor is most likely to respond to.

Webflow's CMS structure supports these patterns well. Agencies create content variants for different segments, then use conditional logic and testing tools to serve appropriate variants. The key infrastructure requirement involves tracking visitor attributes and making them available when the page renders. Because Webflow's native conditional visibility evaluates CMS field values rather than visitor data, this typically requires JavaScript that reads cookies, URL parameters, or localStorage values and then shows or hides the matching variant elements, or a testing or personalization tool that does the same.
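Below is a minimal sketch of the attribute-gathering step, kept free of browser globals so the rules are visible: the caller passes in the referrer, the UTM source, and whether a first-visit marker already exists (in a browser these would come from `document.referrer`, the `utm_source` query parameter, and `localStorage`). The segment names and the source-to-industry table are hypothetical.

```typescript
interface VisitorContext {
  referrer: string; // e.g. document.referrer ("" when there is none)
  utmSource: string | null; // e.g. the utm_source query parameter
  hasVisitedBefore: boolean; // e.g. whether a first-visit marker exists in localStorage
}

interface Segment {
  visitorType: "first_time" | "returning";
  industry: string;
}

// Hypothetical lookup from campaign sources or referring hosts to industry examples.
const INDUSTRY_BY_SOURCE = new Map<string, string>([
  ["fintech_newsletter", "finance"],
  ["healthit.example.com", "healthcare"],
]);

// Campaign source wins over referrer; anything unrecognized falls back to "general".
function detectSegment(visitor: VisitorContext): Segment {
  let industry = visitor.utmSource ? INDUSTRY_BY_SOURCE.get(visitor.utmSource) : undefined;
  if (industry === undefined && visitor.referrer) {
    try {
      industry = INDUSTRY_BY_SOURCE.get(new URL(visitor.referrer).hostname);
    } catch {
      // Malformed referrer: fall through to the general segment.
    }
  }
  return {
    visitorType: visitor.hasVisitedBefore ? "returning" : "first_time",
    industry: industry ?? "general",
  };
}

// In a browser, the result might be written to a data attribute on <body>
// so CSS or a toggle script can show the matching variant.
console.log(detectSegment({ referrer: "https://healthit.example.com/article", utmSource: null, hasVisitedBefore: false }));
```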

Building Internal Capabilities: Training Teams for Testing Independence

Technical infrastructure alone doesn't create experimentation culture. Top Webflow design agencies invest significant effort in training client teams to run tests independently, ensuring optimization continues after project handoff.

Documentation and Playbooks

Agencies provide comprehensive documentation that covers both technical implementation and strategic framework. Technical documentation includes step-by-step guides for common testing scenarios (adding a headline variant, creating a new landing page, swapping out hero images), troubleshooting guides for typical issues, and reference materials for conditional visibility rules and CMS filtering.

Strategic documentation addresses what to test, when to test it, and how to interpret results. This includes testing prioritization frameworks (ICE scoring, PIE scoring), hypothesis templates that enforce rigorous thinking, sample size calculators that prevent premature conclusions, and statistical significance thresholds appropriate for the team's traffic levels.
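A sample size calculation belongs in the playbook because it shows how quickly required traffic grows as the detectable lift shrinks. This sketch uses one common normal-approximation formula for comparing two conversion rates, with a two-sided test at 95% confidence and 80% power hard-coded as z-values of 1.96 and 0.8416. It is a planning estimate, not a replacement for the calculator built into a testing platform.

```typescript
// Two-sided test at 95% confidence (alpha = 0.05) with 80% power.
const Z_ALPHA = 1.959964;
const Z_BETA = 0.841621;

// Visitors needed in EACH variant to detect a given relative lift over a baseline
// conversion rate, using the normal-approximation formula
//   n = (z_alpha + z_beta)^2 * [p1(1 - p1) + p2(1 - p2)] / (p2 - p1)^2
function sampleSizePerVariant(baselineRate: number, relativeLift: number): number {
  const p1 = baselineRate;
  const p2 = baselineRate * (1 + relativeLift);
  if (p1 <= 0 || p1 >= 1 || p2 <= 0 || p2 >= 1 || relativeLift === 0) {
    throw new Error("both rates must lie strictly between 0 and 1, and the lift must be non-zero");
  }
  const zSum = Z_ALPHA + Z_BETA;
  const variance = p1 * (1 - p1) + p2 * (1 - p2);
  return Math.ceil((zSum * zSum * variance) / ((p2 - p1) * (p2 - p1)));
}

// Smaller detectable lifts need far more traffic.
for (const lift of [0.1, 0.2, 0.3]) {
  console.log(`2% baseline, ${Math.round(lift * 100)}% relative lift: ${sampleSizePerVariant(0.02, lift)} visitors per variant`);
}
```

At a 2% baseline, detecting a 20% relative lift already needs on the order of 21,000 visitors per variant, which is why low-traffic teams should plan for fewer, bolder tests.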

The best documentation includes video walkthroughs and real examples from past tests. Rather than abstract descriptions, teams see exactly how previous tests were implemented and what results emerged. This concrete guidance accelerates learning and builds confidence. For additional frameworks and implementation guides, organizations can reference comprehensive Webflow resources that cover testing strategy, CRO best practices, and optimization case studies.

Governance Without Bottlenecks

A common challenge involves balancing testing velocity with quality control. Marketing teams want to iterate quickly, but unrestricted testing can damage brand consistency, introduce bugs, or generate conflicting results from overlapping tests.

Top agencies solve this through governance systems that enforce standards without creating approval bottlenecks. Typical approaches include tiered authorization (marketing team members can run simple tests independently, complex structural changes require design review), automated validation (style system checks that prevent brand guideline violations), and capacity planning (test calendars that prevent overlap and ensure clean result interpretation).
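Capacity planning can start as a simple overlap check over the test calendar. Below is a sketch assuming each planned test records the page paths it changes and an inclusive start and end date (hypothetical fields and test IDs); two tests conflict when they share a page and their date ranges intersect. Tests on different pages can still interact through the same visitors, which this check does not catch.

```typescript
interface PlannedTest {
  id: string;
  pages: string[]; // URL paths the test changes
  start: Date;
  end: Date; // inclusive
}

interface Conflict {
  first: string;
  second: string;
  sharedPages: string[];
}

function datesOverlap(a: PlannedTest, b: PlannedTest): boolean {
  return a.start.getTime() <= b.end.getTime() && b.start.getTime() <= a.end.getTime();
}

// Flags every pair of tests that touch the same page during overlapping dates.
function findConflicts(calendar: PlannedTest[]): Conflict[] {
  const conflicts: Conflict[] = [];
  calendar.forEach((a, i) => {
    for (const b of calendar.slice(i + 1)) {
      const sharedPages = a.pages.filter((page) => b.pages.includes(page));
      if (sharedPages.length > 0 && datesOverlap(a, b)) {
        conflicts.push({ first: a.id, second: b.id, sharedPages });
      }
    }
  });
  return conflicts;
}

const plannedTests: PlannedTest[] = [
  { id: "hero-headline", pages: ["/"], start: new Date("2024-03-01"), end: new Date("2024-03-21") },
  { id: "pricing-plan-order", pages: ["/pricing"], start: new Date("2024-03-05"), end: new Date("2024-03-26") },
  { id: "homepage-social-proof", pages: ["/"], start: new Date("2024-03-15"), end: new Date("2024-04-05") },
];
console.log(findConflicts(plannedTests));
```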

Some agencies implement "sandbox" environments where teams can experiment freely before promoting tests to production. Webflow's staging environments enable this pattern. Teams can build and QA multiple variants in staging, then push winning variants to production with confidence.

Measurement and Optimization: Turning Data into Growth

Testing infrastructure only creates value when combined with rigorous measurement and analysis. Top agencies establish comprehensive analytics frameworks that track not just test results but overall site health.

Metric Selection and Goal Tracking

Different tests require different success metrics. Homepage hero tests might optimize for bounce rate reduction and scroll depth. Landing page tests focus on form conversion. Pricing page tests measure trial sign-ups and paid conversion rates. Leading agencies help teams select appropriate metrics for each test and configure tracking correctly.

Beyond primary conversion metrics, agencies track secondary and counter metrics. A test that increases trial sign-ups but decreases onboarding completion ultimately fails. A variant that improves mobile conversion but tanks desktop performance creates more problems than it solves. Comprehensive metric tracking reveals these trade-offs.
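Counter metrics are easier to enforce when they are written down as guardrails next to the primary metric before the test starts. Below is a minimal sketch; the metric names, the numbers, and the 2% tolerance are hypothetical, every metric is assumed to be higher-is-better, and the function compares point estimates only, so it complements rather than replaces a significance check.

```typescript
interface MetricResult {
  metric: string;
  control: number;
  variant: number; // all metrics here are assumed "higher is better"
}

// Relative change of the variant versus control (0.1 means +10%).
function relativeChange(m: MetricResult): number {
  return (m.variant - m.control) / m.control;
}

// Ships only if the primary metric improves and no guardrail falls by more
// than the tolerance. Point estimates only: pair this with a significance check.
function evaluateVariant(primary: MetricResult, guardrails: MetricResult[], tolerance = 0.02) {
  const breached = guardrails.filter((g) => relativeChange(g) < -tolerance).map((g) => g.metric);
  const primaryLift = relativeChange(primary);
  return { ship: primaryLift > 0 && breached.length === 0, primaryLift, breached };
}

const verdict = evaluateVariant(
  { metric: "trial_signup_rate", control: 0.02, variant: 0.023 },
  [
    { metric: "onboarding_completion_rate", control: 0.6, variant: 0.51 },
    { metric: "desktop_conversion_rate", control: 0.025, variant: 0.0248 },
  ],
);
console.log(verdict);
```

Here trial sign-ups rise by about 15%, but onboarding completion falls by 15%, so the variant does not ship; the small desktop dip stays within tolerance.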

Many agencies implement custom event tracking that measures micro-conversions throughout the funnel. Rather than only tracking final conversion, they measure engagement indicators such as video plays, feature exploration, pricing calculator usage, and FAQ interactions. These intermediate metrics often provide earlier signals about variant performance and enable faster decision-making.
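Micro-conversions are easiest to analyze when every event carries the experiment and variant it was recorded under. Below is a sketch that pushes events into a Google Tag Manager-style data layer: GTM reads plain objects pushed onto `window.dataLayer`, and its custom-event triggers match on the `event` key. The event names and the `experiment_id`/`variant_id` keys are hypothetical and would need matching variables in the tag configuration.

```typescript
type DataLayerEntry = Record<string, unknown>;

interface ExperimentContext {
  experimentId: string;
  variantId: string;
}

// Records a micro-conversion together with the experiment assignment it happened under.
// Details are spread first so they cannot overwrite the reserved keys.
function trackMicroConversion(
  dataLayer: DataLayerEntry[],
  experiment: ExperimentContext,
  eventName: string,
  details: Record<string, string | number> = {},
): void {
  dataLayer.push({
    ...details,
    event: eventName,
    experiment_id: experiment.experimentId,
    variant_id: experiment.variantId,
  });
}

// In a browser the array would be the page's GTM data layer (window.dataLayer).
const eventLog: DataLayerEntry[] = [];
const pricingTest: ExperimentContext = { experimentId: "pricing-social-proof", variantId: "logos" };
trackMicroConversion(eventLog, pricingTest, "pricing_calculator_used", { seats: 25 });
trackMicroConversion(eventLog, pricingTest, "faq_opened", { question: "refund_policy" });
console.log(eventLog);
```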

Statistical Rigor and Result Interpretation

One of the most valuable services top agencies provide involves preventing false positives and premature conclusions. Poorly implemented testing programs often generate misleading results that hurt more than help.

Agencies ensure tests reach a pre-planned sample size and statistical significance before declaring winners, typically using 95% confidence thresholds. They account for multiple comparison problems when testing many variants simultaneously. They recognize and mitigate biases and confounders such as seasonality effects, novelty effects, and selection bias.
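For a single comparison of two conversion rates, the classic check behind a 95% threshold is a two-proportion z-test. The simplest guard against the multiple-comparison problem is a Bonferroni correction, which divides alpha by the number of variant-versus-control comparisons. Below is a sketch with hypothetical counts; the normal CDF uses the Abramowitz and Stegun 7.1.26 approximation to erf (absolute error below about 1.5e-7), which is ample for a decision threshold. Testing platforms may use different methods, so treat this as a cross-check rather than the tool's own calculation.

```typescript
// Abramowitz & Stegun 7.1.26 approximation to erf (absolute error below ~1.5e-7).
function erf(x: number): number {
  const sign = x < 0 ? -1 : 1;
  const ax = Math.abs(x);
  const t = 1 / (1 + 0.3275911 * ax);
  const poly =
    t * (0.254829592 + t * (-0.284496736 + t * (1.421413741 + t * (-1.453152027 + t * 1.061405429))));
  return sign * (1 - poly * Math.exp(-ax * ax));
}

function normalCdf(z: number): number {
  return 0.5 * (1 + erf(z / Math.SQRT2));
}

// Two-sided two-proportion z-test with a pooled standard error.
function twoProportionZTest(convA: number, visitorsA: number, convB: number, visitorsB: number) {
  const pA = convA / visitorsA;
  const pB = convB / visitorsB;
  const pooled = (convA + convB) / (visitorsA + visitorsB);
  const se = Math.sqrt(pooled * (1 - pooled) * (1 / visitorsA + 1 / visitorsB));
  const z = (pB - pA) / se;
  return { z, pValue: 2 * (1 - normalCdf(Math.abs(z))) };
}

// Bonferroni: split alpha across every variant-versus-control comparison.
function isSignificant(pValue: number, alpha = 0.05, comparisons = 1): boolean {
  return pValue < alpha / comparisons;
}

// Hypothetical counts: control 200/10,000 vs variant 260/10,000.
const zResult = twoProportionZTest(200, 10000, 260, 10000);
console.log(zResult, isSignificant(zResult.pValue, 0.05, 2));
```

The same result (p of roughly 0.005) clears the bar when two variants are compared with control but not when twenty are, which is the multiple-comparison problem in miniature.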

For lower-traffic sites, agencies implement Bayesian testing approaches that provide probability distributions rather than binary significant/not-significant results. This enables better decision-making with limited data. For tests that take months to reach significance, agencies use sequential testing protocols that enable stopping early when results are clear without inflating false positive rates.
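The Bayesian version replaces the p-value with a direct statement such as "there is a 94% probability that B's true rate is higher than A's." With a uniform Beta(1, 1) prior, each variant's posterior is Beta(conversions + 1, non-conversions + 1). The sketch below approximates P(B > A) by treating the two posteriors as normal distributions with matching means and variances. This is reasonable at typical web-test sample sizes but rough for very small counts; exact answers need numerical integration or Monte Carlo sampling. The counts are hypothetical, and the normal CDF uses the Abramowitz and Stegun erf approximation.

```typescript
// Abramowitz & Stegun 7.1.26 approximation to erf (absolute error below ~1.5e-7).
function erf(x: number): number {
  const sign = x < 0 ? -1 : 1;
  const ax = Math.abs(x);
  const t = 1 / (1 + 0.3275911 * ax);
  const poly =
    t * (0.254829592 + t * (-0.284496736 + t * (1.421413741 + t * (-1.453152027 + t * 1.061405429))));
  return sign * (1 - poly * Math.exp(-ax * ax));
}

function normalCdf(z: number): number {
  return 0.5 * (1 + erf(z / Math.SQRT2));
}

// Mean and variance of the Beta(conversions + 1, non-conversions + 1) posterior.
function betaPosteriorMoments(conversions: number, visitors: number): { mean: number; variance: number } {
  const a = conversions + 1;
  const b = visitors - conversions + 1;
  return { mean: a / (a + b), variance: (a * b) / ((a + b) ** 2 * (a + b + 1)) };
}

// Normal approximation to P(rate_B > rate_A) from the two Beta posteriors.
function probabilityBBeatsA(convA: number, visitorsA: number, convB: number, visitorsB: number): number {
  const postA = betaPosteriorMoments(convA, visitorsA);
  const postB = betaPosteriorMoments(convB, visitorsB);
  return normalCdf((postB.mean - postA.mean) / Math.sqrt(postA.variance + postB.variance));
}

// Hypothetical low-traffic test: 40/2,000 vs 55/2,000.
const pBBeatsA = probabilityBBeatsA(40, 2000, 55, 2000);
console.log(`P(B > A) is about ${(pBBeatsA * 100).toFixed(1)}%`);
```

A team can then act on a pre-agreed threshold (say, 95%) or keep collecting data, instead of waiting for a binary significant/not-significant verdict.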

Case Study Patterns: Results from Real Implementations

While specific client results remain confidential, patterns emerge across projects that illustrate the value of experimentation-ready Webflow builds.

A typical engagement involves a B2B SaaS company with 50,000 monthly visitors and 2% trial sign-up rates. The company launches with a CRO-ready Webflow build that includes conditional variant systems and integrated testing tools. Over six months, the marketing team runs 12 tests across homepage, feature pages, and pricing.

The first homepage hero test compares three value proposition approaches. The winning variant emphasizes ROI and includes specific dollar figures, outperforming the original by 18% for trial sign-ups. The team promotes this variant to production, generating an estimated 180 additional trials monthly.

Subsequent tests optimize the trial sign-up form (reducing fields from seven to three improves completion by 31%), pricing page social proof (customer logos outperform testimonial quotes by 12%), and feature page structure (benefit-focused narrative beats feature grid by 22% for page engagement).

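After six months, cumulative optimization improves overall trial sign-up rates from 2.0% to 2.9%, a 45% relative increase, or roughly 450 additional trials per month (5,400 per year) from the same traffic volume. At the company's average customer lifetime value of $12,000, the estimated $5.4 million in incremental customer lifetime value per year implies that roughly one in twelve of those additional trials (about 450 customers) converts to a paid plan.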
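The case-study figures can be reproduced in a few lines, which also makes the implied trial-to-paid rate explicit. The 50,000 visitors, the 2.0% and 2.9% rates, the 18% hero lift, and the $12,000 lifetime value come from the example; the trial-to-paid rate is not stated, so the sketch derives the rate the $5.4 million figure implies rather than assuming one.

```typescript
// Figures from the case study.
const monthlyVisitors = 50000;
const baselineRate = 0.02;
const optimizedRate = 0.029;
const lifetimeValue = 12000;
const statedAnnualValue = 5400000;

const relativeIncrease = (optimizedRate - baselineRate) / baselineRate; // about 0.45
const extraTrialsPerMonth = Math.round(monthlyVisitors * (optimizedRate - baselineRate)); // 450
const extraTrialsPerYear = extraTrialsPerMonth * 12; // 5,400
// Not stated in the case study: what the $5.4M figure implies about trial-to-paid conversion.
const impliedCustomersPerYear = statedAnnualValue / lifetimeValue; // 450
const impliedTrialToPaid = impliedCustomersPerYear / extraTrialsPerYear; // about 0.083, one in twelve

// First hero test: an 18% lift on 1,000 baseline trials per month.
const heroExtraTrials = Math.round(monthlyVisitors * baselineRate * 0.18); // 180

console.log({ relativeIncrease, extraTrialsPerMonth, extraTrialsPerYear, impliedTrialToPaid, heroExtraTrials });
```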

The infrastructure investment that enabled this outcome took approximately 15% additional project time compared to a standard Webflow build, roughly two weeks for a three-month project. The return on this investment materialized within the first quarter after launch.
